How to A/B Test and Optimize Your Caller Scripts Using Real-Time Conversation Data

Learn how to apply rigorous A/B testing methods to your caller sales scripts. Use real-time conversation data to continuously optimize performance and boost conversions.

TL;DR

- Treat your call script like a lab experiment: run disciplined A/B tests on one variable at a time, track one outcome, and use conversation intelligence to understand why variants win. Pair this mindset with consistent sales script testing across regions and personas.

- Prioritize the highest-impact lines first (openers and CTAs). Small tweaks compound; industry analysis shows that a single change to the opener can dramatically increase booked meetings.

- Blend quantitative metrics with qualitative reviews. Pair talk/listen ratios, answer rates, and conversion per 100 dials with actual call snippets and objection themes.

- Scale with AI: use artificial intelligence sales tools to auto-transcribe, tag, and coach; then automate lead responses so your speed-to-lead shrinks and pipeline velocity rises.

- Extend experiments beyond live calls. Run A/B tests on different follow up cadences, localize by GEO, and codify how to nurture leads based on what your real-time data proves is working.

When high-velocity outbound is your growth engine, “good enough” scripting is expensive. Cold outreach conversion rates hover in the low single digits, which means a small lift, often from one line, creates outsized ROI. The teams outpacing their peers do two things relentlessly: they run disciplined sales script testing every week, and they operationalize real-time conversation data to adjust in hours, not quarters.

Below is a field-ready playbook you can implement immediately, whether you manage BDRs, lead RevOps, or own the growth number. You’ll learn how to design rigorous experiments, use AI to reveal why a variant performs, and translate call insights into better follow-up flows, localized outreach, and pipeline velocity, all supported by authoritative research and practical templates. You’ll also see where LeadChaser can help you run tests, systemize changes, and automate lead responses at scale.

Why A/B test caller scripts now?

Because your script is a controllable lever with measurable impact, and even a modest lift compounds across thousands of dials.

Industry data pegs dial-to-meeting rates around a few percentage points, so moving from 2.3% to 3.0% can change your quarter. If you run sales script testing with rigor, you’ll find those gains faster than any list purchase or tech swap.
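To see why a fraction of a point matters, here is a quick back-of-the-envelope sketch. The two rates are the ones quoted above; the 10,000-dial volume is a hypothetical figure chosen purely for illustration.

```python
# Back-of-the-envelope: what moving from 2.3% to 3.0% means in meetings.
# DIALS is a hypothetical volume, not a benchmark.
DIALS = 10_000
BASELINE_RATE = 0.023
IMPROVED_RATE = 0.030

baseline_meetings = DIALS * BASELINE_RATE
improved_meetings = DIALS * IMPROVED_RATE
extra_meetings = improved_meetings - baseline_meetings
relative_lift = (IMPROVED_RATE - BASELINE_RATE) / BASELINE_RATE

print(f"Extra meetings: {extra_meetings:.0f}")
print(f"Relative lift: {relative_lift:.1%}")
```

Under those assumptions, the 0.7-point gain works out to roughly 70 additional meetings, a relative lift of about 30%.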

Harvard Business School Online breaks down A/B tests as simple hypothesis tests: change one element, hold all else constant, and measure one outcome you care about (e.g., meetings booked) so you can trust the results. Start where most calls live or die: the opener and the CTA. Industry write-ups summarizing field data showed that the right opener can deliver outsized increases in meetings, reinforcing that script details aren’t cosmetic; they’re causal.

What is A/B testing for sales calls, exactly?

It’s controlled experimentation. You split a comparable set of prospects between Script A and Script B, change exactly one element (e.g., opener phrasing), and choose one simple outcome metric (e.g., meetings per 100 conversations). That’s sales script testing in practice, and it’s your fastest path to statistically meaningful insight without disrupting the funnel.

- One variable at a time

- One success metric

- One window of time

- Comparable prospect cohorts

Tag each call to a variant and collect both numbers and narratives. Your qualitative review explains why the winner wins.

The metrics that matter right away:

- Meetings booked per 100 dials

- Meetings booked per 100 connections

- Answer rate

- Conversion rate by segment (industry, title, region)

- Talk-to-listen ratio

- Objection types and frequency

- Time-to-next-step after the call
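The first three metrics above can be computed from a plain call log. The sketch below is one minimal way to do it; the `Call` record and its field names are assumptions for illustration, not the export format of any particular dialer or CRM.

```python
from dataclasses import dataclass


@dataclass
class Call:
    variant: str          # script variant tag, e.g. "A" or "B"
    connected: bool       # True if a live conversation happened
    meeting_booked: bool  # True if the call ended with a booked meeting


def variant_metrics(calls, variant):
    """Summarize dial-level outcomes for one script variant."""
    subset = [c for c in calls if c.variant == variant]
    dials = len(subset)
    connections = sum(c.connected for c in subset)
    meetings = sum(c.meeting_booked for c in subset)
    return {
        "dials": dials,
        "answer_rate": connections / dials if dials else 0.0,
        "meetings_per_100_dials": 100 * meetings / dials if dials else 0.0,
        "meetings_per_100_connections": (
            100 * meetings / connections if connections else 0.0
        ),
    }


# Hypothetical sample: 200 dials, 40 connections, 4 meetings for variant A.
calls = (
    [Call("A", True, True)] * 4
    + [Call("A", True, False)] * 36
    + [Call("A", False, False)] * 160
)
summary = variant_metrics(calls, "A")
print(summary)
```

Reporting per-dial and per-connection rates side by side helps separate a list or timing problem (low answer rate) from a script problem (low conversion once someone picks up).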

A practical cadence is 50–100 conversations per variant before declaring a winner. That volume reduces noise and improves clarity in your sales script testing. Then, implement the winner and test the next variable.
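To gauge how much noise remains at that volume, one option is to put a confidence interval around each variant’s conversion rate. The sketch below uses the Wilson score interval, a standard statistical choice introduced here for illustration rather than something the cited sources prescribe. The sample counts are hypothetical.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96):
    """Wilson score interval for a proportion (z=1.96 is roughly 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


# Hypothetical result: 10 meetings booked in 100 conversations.
low, high = wilson_interval(10, 100)
print(f"95% interval: {low:.1%} to {high:.1%}")
```

For 10 meetings in 100 conversations, the interval runs from about 5.5% to 17.4%. That is wide enough that two variants a point or two apart are hard to tell from chance, so treat small gaps at this volume as directional and confirm them with more calls.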

How to design a rigorous test without disrupting the pipeline

Keep experiments narrow and time-bound. Rotate variants by day, by rep, or by territory so each gets similar exposure. Document your test plan (hypothesis, variable, metric, timeframe) and pre-commit to a decision threshold. This preserves pipeline consistency while enabling confident sales script testing.

- Balance cohorts by geography to avoid time-zone bias

- Normalize by rep (or rotate variants by rep daily)

- Limit to one active test per team at a time

- Lock scripts for the test duration—no mid-run edits

Sample test plan (quick reference)

| Element | Variant A | Variant B | Primary Metric | Volume & Window |
|---|---|---|---|---|
| Opener | Rapport-led question | Value-led statement | Meetings/100 conversations | 100 per variant, 1 week |
| CTA | “15 min this week” | “15 min next week” | Calendars sent within 5 min | 80 per variant, 1 week |
| Follow-up | Cadence A | Cadence B | Meetings held rate | 2 weeks, equal GEO mix |

Controlling for region, market, and rep variability

Treat geography, industry, and rep skill as stratification factors. Randomize within each stratum. For example, if you target North America and EMEA, allocate equal volumes of Script A and Script B within each region and call during peak local hours.

If you have senior and junior reps, ensure each runs both variants for the same number of conversations. This keeps sales script testing clean while staying GEO-aware.
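Randomizing within each stratum can be sketched in a few lines. This is a minimal illustration, assuming each prospect is a dict with hypothetical `id` and `region` keys; the same pattern applies if you stratify by industry or rep seniority instead.

```python
import random
from collections import defaultdict


def stratified_assign(prospects, key, variants=("A", "B"), seed=0):
    """Shuffle prospects inside each stratum, then alternate variants
    so every stratum gets a near-equal split."""
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    by_stratum = defaultdict(list)
    for prospect in prospects:
        by_stratum[key(prospect)].append(prospect)

    assignment = {}
    for members in by_stratum.values():
        rng.shuffle(members)
        for i, prospect in enumerate(members):
            assignment[prospect["id"]] = variants[i % len(variants)]
    return assignment


# Hypothetical list: 20 North America prospects and 20 EMEA prospects.
prospects = [
    {"id": i, "region": "NA" if i < 20 else "EMEA"} for i in range(40)
]
assignment = stratified_assign(prospects, key=lambda p: p["region"])
```

Because the alternation happens after the shuffle and inside each region, neither script ends up over-represented in one time zone.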

Where conversation intelligence and AI change the game

A/B testing tells you what works. Conversation intelligence reveals why. With modern speech-to-text achieving high accuracy in ideal conditions, AI can transcribe calls, tag keywords and sentiment, and highlight objection patterns.

That context explains your test outcomes and shows you exactly what to change next. This is where artificial intelligence sales tools go beyond dashboards: they surface coachable moments, pinpoint language patterns that land, and accelerate your next iteration.

Which AI tools to deploy first

- Conversation intelligence with keyword and intent tagging

- Real-time assist for objection handling

- Auto-logging to CRM and pipeline attribution

- Sequence automation to automate lead responses based on call outcomes

Analysts increasingly describe end-to-end “revenue orchestration” stacks that integrate CRM, engagement, and CI, enabling managers to coach with athletic rigor. Choose artificial intelligence sales tools that surface variant-level insights and can automate lead responses the moment a call ends. That tight loop shortens the path from learning to action and reinforces disciplined sales script testing.

What conversation intelligence adds beyond A/B results

- Reveals whether prospects volunteer pain earlier with a value question

- Shows phrases that reduce price objections

- Confirms whether rep talk time drops below 50% of the call, a sign that discovery is improving

Teams often learn they’ve been talking 70% of the time. Insert five discovery questions, coach reps to listen, and track conversion. Time after time, the combination of CI plus sales script testing produces compound gains.

What to test first (and why)

- Openers: Compare a rapport-driven opening to a value-led one.

- CTAs: “15 minutes this week” versus “next week,” and calendar link vs rep-assisted scheduling.

- Discovery: Add two industry-specific questions against your baseline.

The biggest results often come from micro-tweaks. Document each result, ship the winner, and queue up the next sales script testing variable.

Use the talk-to-listen ratio as a performance lever

Aim to talk less and learn more. Calls with a healthier balance produce better outcomes because they uncover the real buying context. Use CI to monitor rep talk time by stage and by script variant. If a test pushes reps to ask better questions, you’ll see talk time drop and qualified meetings rise. Feed those insights back into your sales script testing roadmap and into the design of your follow-up cadences.
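If your CI tool exports speaker-labeled segments, talk share is simple to compute yourself. The sketch below assumes a hypothetical export format of `(speaker, start_seconds, end_seconds)` tuples; real tools will differ.

```python
def talk_share(segments):
    """Return each speaker's share of total talk time.

    segments: iterable of (speaker, start_seconds, end_seconds).
    """
    totals = {}
    for speaker, start, end in segments:
        totals[speaker] = totals.get(speaker, 0.0) + (end - start)
    total = sum(totals.values())
    if total == 0:
        return {}
    return {speaker: seconds / total for speaker, seconds in totals.items()}


# Hypothetical two-minute call.
segments = [
    ("rep", 0, 30),
    ("prospect", 30, 90),
    ("rep", 90, 120),
]
shares = talk_share(segments)
print(f"Rep talk share: {shares['rep']:.0%}")
```

Group the result by script variant to check whether a new opener or discovery question actually shifts the balance toward the prospect.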

Data to improve caller success

Blend hard numbers with qualitative notes:

- Meetings per 100 conversations by variant

- Talk-to-listen ratio by stage

- Objection frequency mapped to language patterns

- Time-to-next-step (calendar sent within 5 minutes)

- Follow-up conversion by channel and timing

- Geo/time zone impact on pickup and conversion

Tag objections (“no budget,” “using a competitor”) and tie them to the phrasing that preceded them. This hybrid view grounds your sales script testing in reality and helps you tune follow-up cadences and decide how to nurture leads in each segment.
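As a rough illustration of objection tagging, the sketch below counts objections per script variant using simple phrase matching. The labels and phrase lists are hypothetical, and a production CI tool would typically use far more robust classification than substring checks.

```python
from collections import Counter

# Hypothetical objection labels and trigger phrases.
OBJECTION_PHRASES = {
    "no_budget": ("no budget", "can't afford"),
    "competitor": ("using a competitor", "already have a tool"),
}


def tag_objections(transcript: str) -> set:
    """Return the objection labels whose phrases appear in the transcript."""
    text = transcript.lower()
    return {
        label
        for label, phrases in OBJECTION_PHRASES.items()
        if any(phrase in text for phrase in phrases)
    }


def objection_counts(calls):
    """calls: iterable of (variant, transcript). Counts by (variant, label)."""
    counts = Counter()
    for variant, transcript in calls:
        for label in tag_objections(transcript):
            counts[(variant, label)] += 1
    return counts


calls = [
    ("A", "We have no budget this year."),
    ("B", "We're already using a competitor."),
    ("B", "Honestly, there's no budget for this."),
]
counts = objection_counts(calls)
print(counts)
```

Comparing counts across variants shows whether a particular phrasing triggers a given objection more often than its alternative.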

Connect tests to pipeline velocity

Tie every script variant to a follow-up plan, and measure not just meetings set but also meetings held, no-show rate, and stage-to-stage conversion. The fastest path to compounding gains is to extend your experiments into post-call flows: test follow-up cadences, define rules for how to nurture leads, and automate lead responses when specific triggers fire.

A/B test different follow-up cadences with intention

Design two or three distinct cadences and tag outcomes. For example:

- Cadence A: Call → SMS in 2 minutes → Email in 10 minutes → Call next business day

- Cadence B: Email first → Call in 15 minutes → SMS after call → LinkedIn touch Day 2

- Cadence C: Call-only for two days → Email+Call on Day 3

Evaluate reply rate, meetings booked, meetings held, and conversion to next stage. Rotate by territory and persona for fairness. Over time, you’ll discover which follow-up cadences work for enterprise vs mid-market or APAC vs North America, and you’ll codify how to nurture leads accordingly.
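Expressing a cadence as data makes it easier to tag, compare, and swap. The sketch below encodes Cadence A from the list above; the step format and the `schedule` helper are illustrative assumptions, and the business-day logic skips weekends only, not holidays.

```python
from datetime import datetime, timedelta


def next_business_day(ts: datetime) -> datetime:
    """Same clock time on the next weekday (weekends skipped, holidays not)."""
    nxt = ts + timedelta(days=1)
    while nxt.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        nxt += timedelta(days=1)
    return nxt


# Cadence A: Call -> SMS in 2 minutes -> Email in 10 minutes -> Call next business day.
# Each step is (channel, offset); offset is minutes or the marker string below.
CADENCE_A = [
    ("call", 0),
    ("sms", 2),
    ("email", 10),
    ("call", "next_business_day"),
]


def schedule(cadence, start: datetime):
    """Turn a cadence definition into concrete (channel, timestamp) touches."""
    plan = []
    for channel, offset in cadence:
        if offset == "next_business_day":
            when = next_business_day(start)
        else:
            when = start + timedelta(minutes=offset)
        plan.append((channel, when))
    return plan


start = datetime(2024, 3, 1, 10, 0)  # a Friday
plan = schedule(CADENCE_A, start)
for channel, when in plan:
    print(channel, when.isoformat())
```

Storing the cadence ID alongside each lead then lets you report meetings booked and held per cadence, just as you do per script variant.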

Where AI fits into follow-up

- Detect call outcomes (no answer, gatekeeper, interest, qualified pain)

- Trigger workflows that automate lead responses in real time

- Personalize messages with transcript snippets

- Choose different follow up cadences by persona, region, or intent
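The steps above can be sketched as a small routing function: take a detected outcome, pick a cadence, and personalize the first message with a transcript snippet. Everything here (the outcome labels, cadence IDs, and message template) is hypothetical and stands in for whatever your automation platform actually exposes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CallOutcome:
    outcome: str        # e.g. "no_answer", "gatekeeper", "interest", "qualified_pain"
    persona: str
    region: str
    snippet: str = ""   # short quote from the transcript, if any


# Hypothetical routing table: detected outcome -> cadence ID.
CADENCE_BY_OUTCOME = {
    "no_answer": "cadence_a",
    "gatekeeper": "cadence_b",
    "interest": "cadence_b",
    "qualified_pain": "book_meeting",
}


def plan_follow_up(call: CallOutcome) -> dict:
    """Choose a cadence and draft an optional personalized opener."""
    cadence = CADENCE_BY_OUTCOME.get(call.outcome, "manual_review")
    message: Optional[str] = None
    if call.snippet:
        message = f'You mentioned "{call.snippet}". Here is a quick follow-up on that.'
    return {"cadence": cadence, "region": call.region, "message": message}


result = plan_follow_up(
    CallOutcome("qualified_pain", "VP Sales", "EMEA", snippet="reps talk too much")
)
print(result)
```

Unknown outcomes fall back to manual review in this sketch, which keeps automation from firing on calls the system could not classify.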

Organizations adopting the right artificial intelligence sales tools compress speed-to-opportunity by minutes and hours, not just seconds. When these systems automate lead responses with smart templates and regional sensitivity, you’ll see tangible gains in both booked and held meetings.

Optimize AI voice and chat scripts using call data

Apply the same experimental logic at scale. If you deploy AI voicebots, run variants of the bot’s opener, discovery prompts, and CTA timing. Let the system auto-dial, tag every conversation, and report performance by variant. Then update the winning script automatically and repeat.

Artificial intelligence sales tools excel here because bots won’t deviate from the test, which keeps your sales script testing data clean, and lets you automate lead responses consistently after each interaction.

Testing different sales dialogues

- Discovery-heavy vs qualification-heavy in the first two minutes

- Two problem-framing narratives tailored by industry

- Two objection-handling paths for “we already have a tool.”

Set guardrails (time budget, number of questions), run 100 conversations per variant, and analyze with CI to understand the why. Build a library of winning dialogues proven through sales script testing, and deploy them across your stack using artificial intelligence sales tools.

Reinforce them with post-call workflows that automate lead responses, aligned with your chosen follow-up cadences and your playbooks for how to nurture leads.

GEO playbook: localize calling and follow-up without losing control

- Respect time zones and local calling windows to lift answer rates

- Use local area codes for caller ID trust

- Adapt language (“book a demo” vs “schedule a walkthrough”)

- Observe country-specific compliance and opt-in standards

- Account for holidays, fiscal cycles, and norms around directness

Run one variable per region first; when you find a regional winner, port it to another region and retest. Use artificial intelligence sales tools to surface region-specific objections and automate lead responses with localized templates. Then, examine which different follow up cadences perform best by market, and refine how to nurture leads across North America, EMEA, and APAC based on real data.
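A local calling-window check is one of the simpler GEO guardrails to automate. The sketch below assumes a 9:00 to 17:00 weekday window, which is an illustrative default rather than a compliance rule, and uses fixed UTC offsets to stay self-contained. In practice you would likely pass a DST-aware zone such as `zoneinfo.ZoneInfo("America/New_York")` and add a holiday calendar.

```python
from datetime import datetime, time, timedelta, timezone, tzinfo


def in_calling_window(
    now_utc: datetime,
    tz: tzinfo,
    start: time = time(9, 0),
    end: time = time(17, 0),
) -> bool:
    """True if the prospect's local time is a weekday inside [start, end)."""
    local = now_utc.astimezone(tz)
    is_weekday = local.weekday() < 5
    return is_weekday and start <= local.time() < end


# Fixed offsets used as stand-ins for real time zones (no DST handling).
NEW_YORK_WINTER = timezone(timedelta(hours=-5))
TOKYO = timezone(timedelta(hours=9))

now = datetime(2024, 3, 4, 15, 0, tzinfo=timezone.utc)  # a Monday, 15:00 UTC
ok_new_york = in_calling_window(now, NEW_YORK_WINTER)    # 10:00 local
ok_tokyo = in_calling_window(now, TOKYO)                 # 00:00 Tuesday local
print(ok_new_york, ok_tokyo)
```

Filtering the dial queue through a check like this keeps both variants inside comparable local hours, which also protects the test from time-zone bias.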

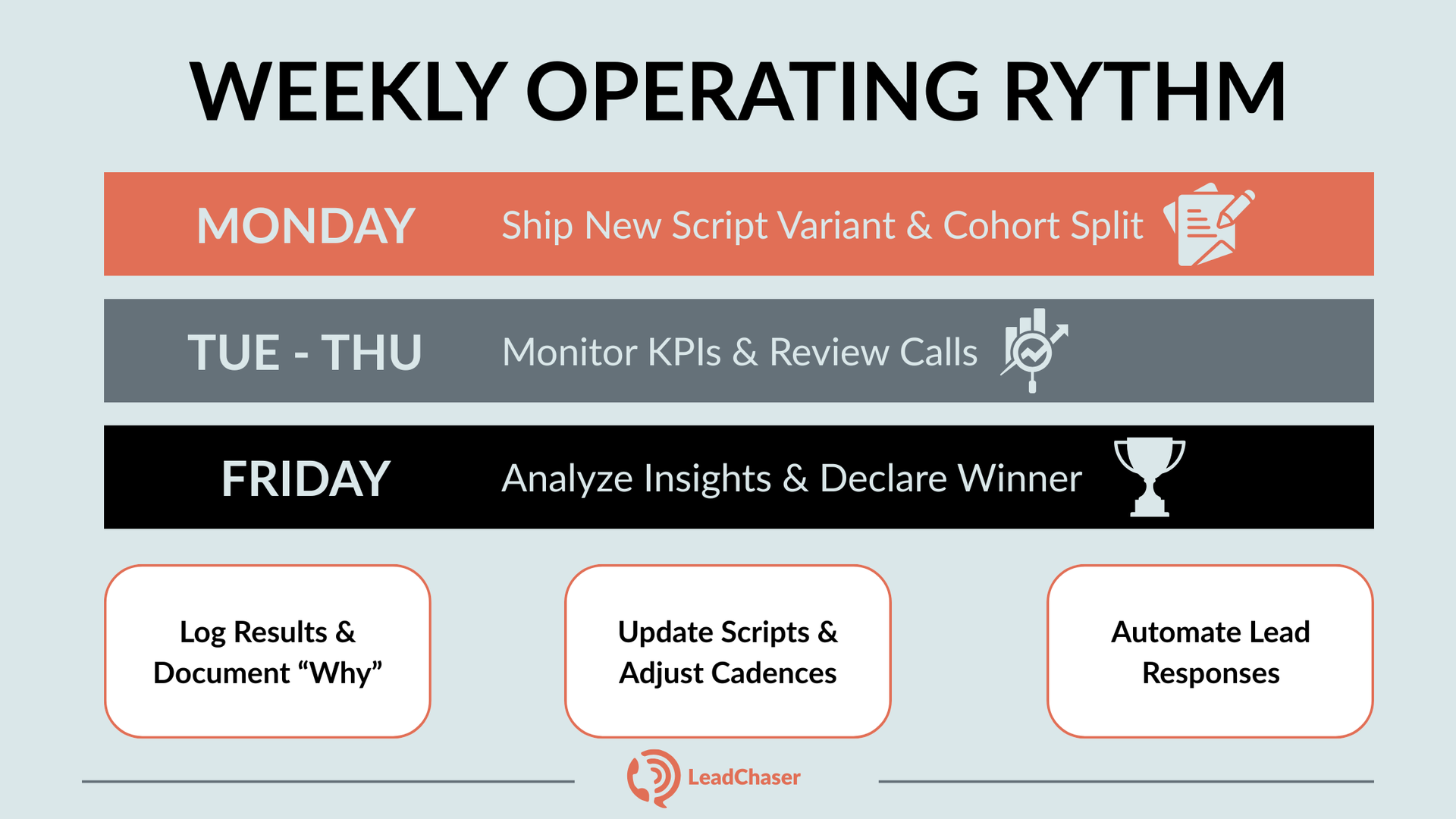

Weekly operating rhythm

- Monday: Ship one new script variant; confirm cohort splits by region and persona

- Tue–Thu: Monitor KPIs; spot-check calls for qualitative patterns

- Friday: Review CI insights; declare a winner if thresholds are met

- Document: Log the test, outcome, and the “why” in your tracker

- Deploy: Update the master script; retrain; adjust your follow-up cadences

- Automate: Configure workflows to automate lead responses with the new language

The best teams run phones like an experiment and treat scripts as living assets. Over a quarter, this cadence builds a durable, region-aware playbook anchored in sales script testing, powered by artificial intelligence sales tools, and scaled by automation.

Your core stack (and where LeadChaser fits)

- Conversation intelligence to transcribe, tag, and analyze calls

- Dialer/engagement platform to run tests consistently

- CRM to capture outcomes and attribute revenue

- Automation to automate lead responses and orchestrate different follow up cadences

- Experiment log and dashboard to standardize sales script testing

If you want this integrated with real-time AI, explore LeadChaser’s capabilities:

- LeadChaser Overview

- Features for Testing and Automation

- Resource Hub and Best Practices

- Pricing and Plans

Implementation blueprint: from first test to scaled program

1. Define your north-star metric and test backlog:

- Metric: meetings booked per 100 conversations

- Backlog: openers, CTAs, discovery, objection handling, voicemail scripts, AI bot prompts, different follow up cadences, and rules for how to nurture leads

- Assign owners and target dates for each cycle of sales script testing

2. Clean your data plumbing:

- Auto-tag the script variant on every call

- Configure CI to capture talk/listen, sentiment, and objection labels

- Ensure CRM fields exist to store cadence ID and whether you automate lead responses

3. Run your first two tests:

- Test A: Two openers

- Test B: Two CTAs at close

- Cohorts: North America and EMEA, equal mixes; rotate by rep daily

4. Review and decide:

- Threshold: +20% lift vs baseline or 95% confidence if volumes allow

- CI review: listen to 10 calls per variant; log 3–5 language patterns you’ll keep or cut

5. Codify and coach:

- Update the master script; deliver a 20-minute training

- Record two example calls to model the new standard

6. Extend into follow-up:

- Ship two different follow up cadences aligned to the winning scripts

- Automate lead responses based on outcomes (voicemail left, “send info,” soft interest)

7. Scale with AI:

- Mirror the winning human script in your bot

- Run sales script testing again, now with bot variants, to learn even faster

- Use artificial intelligence sales tools for alerting and coaching prompts

8. Repeat weekly:

- Add one new variable to the queue

- Archive results and build your playbook chapter by chapter: openers, CTAs, discovery, objections, voicemail, different follow up cadences, and how to nurture leads by segment
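The decision threshold in step 4 (a +20% lift or 95% confidence) can be checked with a two-proportion z-test. The sketch below is one common way to do that using only the standard library; the test choice and the sample counts are assumptions for illustration, and the normal approximation behind it gets less reliable at small volumes.

```python
import math


def two_proportion_z_test(x_a: int, n_a: int, x_b: int, n_b: int):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = x_a / n_a, x_b / n_b
    pooled = (x_a + x_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, p_value


def decide(x_a, n_a, x_b, n_b, min_lift=0.20, alpha=0.05):
    """Report whether B clears the lift threshold and/or 95% confidence."""
    p_a, p_b = x_a / n_a, x_b / n_b
    if p_a == 0:
        lift = float("inf") if p_b > 0 else 0.0
    else:
        lift = (p_b - p_a) / p_a
    _, p_value = two_proportion_z_test(x_a, n_a, x_b, n_b)
    return {
        "relative_lift": lift,
        "p_value": p_value,
        "meets_lift": lift >= min_lift,
        "significant": p_value < alpha,
    }


# Hypothetical results per 100 conversations: A books 10, B books 13 or 20.
small_gap = decide(10, 100, 13, 100)
large_gap = decide(10, 100, 20, 100)
print(small_gap)
print(large_gap)
```

With 10 versus 13 meetings per 100 conversations, the lift threshold is met but the result is far from 95% confidence; 10 versus 20 just clears both. That gap is why the “if volumes allow” caveat matters: a lift-only rule at low volume can crown a winner that is mostly noise.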

Cheat sheet: signals to watch (fast diagnostics)

| Signal | What It Suggests | Action |
|---|---|---|
| Low answer rate | Timing, GEO, or caller ID trust issue | Shift windows, test local presence, re-split cohorts |
| High talk time (>60%) | Over-talking, weak questions | Add discovery prompts, coach, and retest opener |
| Objection spike | Phrase triggers concern (price, time) | Reword value prop and CTA; re-run sales script testing |
| Slow next step | Manual follow-up bottlenecks | Automate lead responses and adjust follow-up cadences |

FAQ: detailed answers to long-tail questions

Q1) How do I optimize AI sales scripts using call data?

Clone your best-performing human script into your bot, then run tightly scoped experiments on opener phrasing, question order, or CTA timing. Track appointments per 100 conversations and talk-time distribution, and review sentiment and objections with CI. Ship the winner to both bots and reps for consistency, and immediately automate lead responses using the exact phrases that converted.

This is disciplined sales script testing through artificial intelligence sales tools, and it pairs naturally with experiments across different follow up cadences and decisions on how to nurture leads post-call.

Q2) What data should I use to improve my caller success rate?

Use a blended view: meetings per 100 conversations, meetings held, conversion by persona and region, talk-to-listen ratio, interruption patterns, time-to-next-step, and objection themes tied to phrasing.

Feed those insights into your sales script testing backlog, and use them to choose different follow up cadences. Then automate lead responses based on outcome tags; that single change often becomes a cornerstone of how to nurture leads effectively.

Q3) What’s the best way to test different sales dialogues?

Limit variables and define the conversation arc before testing. For instance, compare an insight-led opening against a pain-led opening, or run two discovery paths tailored to distinct industries. Capture 100 conversations per variant, evaluate with CI to see why one flow wins, then update enablement and the bot.

Use artificial intelligence sales tools to reinforce the winning flow in real time and to automate lead responses consistently within your chosen follow-up cadences, in line with your standards for how to nurture leads.

Q4) How do I balance regional differences with global consistency?

Start with a global baseline and run regional sales script testing on opener and CTA. Standardize discovery and objection handling, but adapt wording, timing, and channel mix by market. Measure which different follow up cadences perform in each region and encode how to nurture leads accordingly. Use artificial intelligence sales tools to localize content and automate lead responses with appropriate tone and language.

Q5) How fast should I expect results?

With healthy volume, you can get directional results in a week. Small lifts compound quickly, especially when you combine clean sales script testing, CI insights, and workflows that automate lead responses. Reinforce wins by aligning your follow-up cadences to the new language, and keep refining how to nurture leads across personas and regions.

Putting it all together: your next best move

If you do one thing this week, do this: pick your opener and run a disciplined A/B test. Tag every call, review 10 recordings per variant, and let the data pick the line. Update your script, train the team, align your follow-up cadences to that language, and automate lead responses the moment a conversation ends. Then repeat with the close, the discovery sequence, and objection handling.

This cadence compounds. It’s how leaders turn phones into an experiment engine, sharpen how they nurture leads, and make artificial intelligence sales tools a force multiplier.

Ready to operationalize this with real-time AI and a stack built for iteration? Explore how LeadChaser helps you run high-integrity sales script testing, localize at scale, and automate lead responses without losing the human touch.

See Features, browse our Blog, and choose a Plan that gets your team shipping experiments this week.

Works Cited

Baker, Tim Stobierski. “What Is A/B Testing? Definition, Examples, and Benefits.” Harvard Business School Online, 2 Aug. 2023, https://online.hbs.edu/blog/post/what-is-ab-testing.

SalesHive Team. “A/B Testing Cold Calling Scripts for Better Results.” SalesHive, 2024, https://saleshive.com/blog/ab-testing-cold-calling-scripts-better-results.

“How Conversation Intelligence Brings the ‘Why’ to A/B Testing.” InMoment, 2023, https://inmoment.com/blog/ci-insight-for-ab-testing.

“Conversation Intelligence for Sales Teams: What It Is and How to Use It.” Arist, 2024, https://www.arist.co/post/conversation-intelligence-sales-teams.

“Improving Complex B2B Sales Calls with Conversation Intelligence Tools.” Insight7, 2024, https://insight7.io/improving-complex-b2b-sales-calls-with-conversation-intelligence-tools.

Hern, Alex. “Microsoft Saved $500 Million by Using AI in Its Call Centers Last Year — and It’s a Sign of Things to Come.” ITPro, 2024, https://www.itpro.com/business/business-strategy/microsoft-saved-usd500-million-by-using-ai-in-its-call-centers-last-year-and-its-a-sign-of-things-to-come-for-everyone-else.

McKittrick, Mary Shea, et al. “What I Learned About Sales Technology in 2024.” Forrester, 2024, https://www.forrester.com/blogs/what-i-learned-about-sales-technology-in-2024.

“Cold Call Conversion Rates: Top Success Rates for 2025.” PowerDialer, 2025, https://www.powerdialer.ai/blog/cold-call-conversion-rates-top-success-rates-for-2025.